Functional vs. Unit Testing for the Browser

Back in 2013, I wrote a blog post in which I argued for prioritizing functional testing over unit testing in front-end development. “Unit testing makes sure you are using quality ingredients. Functional testing makes sure your application doesn’t taste like crap,” I proclaimed, quite pleased with myself.

Over the next two years, I acted on this misguided principle, erecting a towering, rickety functional test suite which strove to cover nearly every combination of user actions I could think of. At its zenith, the test suite covered hundreds of user interactions and clocked in at over 20 minutes to complete. As it expanded, small changes to the application broke vast swaths of tests in ways that were increasingly difficult to trace. Race conditions plagued our team with tests that failed nondeterministically, until at last the entire structure collapsed under the weight of my Ozymandian ambitions.

In the year since the collapse of my overly ambitious functional test suite, I’ve come to sing quite a different tune about the respective roles of functional and unit testing in front-end development. Today, I write unit tests as I develop new features, and mostly relegate functional testing to a small set of quick, non-exhaustive smoke tests which simply ensure that a handful of critical features remain unbroken.

So where did things go sideways? My cooking analogy was so apt! What could possibly be wrong with relying on functional testing over unit testing? Turns out quite a lot.

There are ten thousand blog posts out there extolling the virtues of TDD/BDD, so I’m going to focus here on what I’ve learned from my functional testing misadventures, and how we found a nice balance between the two.

Functional testing is a poor first line of defense

Functional testing presents a lot of challenges which make it a poor go-to choice for testing your application’s front-end.

Functional testing modifies persistent state

One difficulty we ran into with our functional tests was what to do about persistent state. Unlike unit tests, functional tests integrate with an actual database, and can have side effects which outlive the test run!

In order to avoid scenarios where the act of running a test can cause future tests to fail, you’ll need to do one of several things:

Write tests that have few or no side effects (which largely defeats the purpose of functional testing)

Make your tests clean up after themselves (not always possible and hard to do if your test fails)

Reset your entire database before every test run (slow, requires carefully maintaining test database)

We tried all three approaches, but eventually settled on the third. With our exhaustive functional test suite in constant flux, we sank a lot of time into keeping our test database in a very specific state, so that our functional tests could make assumptions about its contents. Unfortunately, since (spoiler alert!) our tests were too slow to run continuously in a CI setting, if someone made an innocuous change to our Liquibase-managed test database, we wouldn’t find out until much later that the change had in fact broken a test which relied on the old state.
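To make the reset approach and its fragility concrete, here is a minimal sketch that uses a plain in-memory object as a stand-in for the test database. The `fixture`, `resetDatabase`, `runSuite`, and both test functions are hypothetical; a real suite would restore a database dump or rerun migrations rather than clone an object.

```javascript
// Hypothetical known-good state that the functional tests rely on. In a real
// suite this would be a database dump or migration set, not an object.
const fixture = {
  todos: [
    { id: 1, title: "Buy milk", done: false },
    { id: 2, title: "Walk dog", done: true },
  ],
};

// Stand-in for the shared test database (assumption: in-memory only).
let db = null;

// Restore the "database" to a fresh deep copy of the fixture.
function resetDatabase() {
  db = JSON.parse(JSON.stringify(fixture));
}

// A test with a side effect: it deletes the only completed todo.
function testDeleteTodo() {
  db.todos = db.todos.filter((todo) => todo.id !== 2);
  return db.todos.length === 1;
}

// A test that silently assumes the fixture holds exactly one completed todo.
function testCountsCompletedTodos() {
  return db.todos.filter((todo) => todo.done).length === 1;
}

// Run tests in order, optionally resetting the database before each one.
function runSuite(tests, resetBetweenTests) {
  resetDatabase();
  return tests.map((test) => {
    if (resetBetweenTests) resetDatabase();
    return test();
  });
}

const suite = [testDeleteTodo, testCountsCompletedTodos];
console.log(runSuite(suite, true)); // [ true, true ]
console.log(runSuite(suite, false)); // [ true, false ]
```

Without a reset, the first test's deletion leaks into the second. Even with resets, `testCountsCompletedTodos` encodes an assumption about the fixture: if someone edits the fixture to mark another todo done, the test breaks although no application code changed.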

Functional testing is slow

With a headless browser executing full page loads against an actual server running against an actual database rendering actual HTML, any functional testing setup is bound to be far slower to run than your unit test suite. If you want to run your functional test suite as part of your Continuous Integration process, this can become a real deal-breaker.

One way to mitigate this is to break your test suite into smaller sets of tests and run those sets in parallel. Unfortunately, since functional tests rely on a shared persistence layer, it becomes very easy to accidentally write tests which collide when run at the same time!
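The collision is easy to reproduce even without a browser. In this sketch, a shared array stands in for the shared persistence layer and `setTimeout` stands in for a server round trip; `sharedTable`, both tests, and both runners are hypothetical. Each test passes when run on its own, but when the two run concurrently each one sees the other's rows.

```javascript
// Stand-in for the persistence layer shared by every test worker.
const sharedTable = [];

// Simulates the latency of a request to the server and database.
const roundTrip = () => new Promise((resolve) => setTimeout(resolve, 10));

// Each hypothetical test creates rows, waits for the "server", then checks
// how many rows exist, implicitly assuming it is the only writer.
async function testCreatesTwoTodos() {
  sharedTable.push({ title: "a" }, { title: "b" });
  await roundTrip();
  return sharedTable.length === 2;
}

async function testCreatesOneTodo() {
  sharedTable.push({ title: "c" });
  await roundTrip();
  return sharedTable.length === 1;
}

const concurrentTests = [testCreatesTwoTodos, testCreatesOneTodo];

// Sequential run: clear the table before each test.
async function runSequentially() {
  const results = [];
  for (const test of concurrentTests) {
    sharedTable.length = 0;
    results.push(await test());
  }
  return results;
}

// Parallel run: both tests write to the same table at the same time.
async function runInParallel() {
  sharedTable.length = 0;
  return Promise.all(concurrentTests.map((test) => test()));
}
```

`runSequentially()` resolves to `[true, true]`, while `runInParallel()` resolves to `[false, false]`: both tests push their rows before either checks the count, so each finds three rows instead of the number it created.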

Functional testing is prone to nondeterministic failures

Functional testing in a browser relies on tools like CasperJS or Nightwatch, which drive a browser (often a headless one) against the actual running application and simulate user clicks and keystrokes. With the many asynchronous operations that take place in a browser application, it is very common for tests to fail nondeterministically as a result of unforeseen race conditions. This is especially true if you attempt to exhaustively test a complex application.

By nondeterministic, we mean that a test may pass on one run but fail on the next. This is far harder to debug than a test which fails consistently (i.e. deterministically), since you must figure out how to reproduce the failure before you can figure out why it failed. In something as opaque as a headless browser running your application, figuring out the why is especially difficult.


You can take steps to mitigate nondeterministic failures by writing your tests in a defensive manner (for instance, explicitly waiting for a modal to become visible in the UI before triggering a click on one of its buttons), but it’s all too easy to write a functional test which succeeds 9 times in a row, then fails on the 10th run. When a test failure is more likely to be the result of a poorly written test than bad application logic, you’re not getting a good return on your testing investment.
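The defensive style described above boils down to polling for a condition before acting. Nightwatch ships commands such as `waitForElementVisible` for this; the sketch below shows the underlying idea in plain JavaScript instead. The `waitFor` helper, the `sleep` utility, and the fake `modal` object standing in for the DOM are all hypothetical, not part of any testing library.

```javascript
// Hypothetical helper: poll `predicate` until it returns true, or reject
// once `timeoutMs` has elapsed. Not part of any testing library.
function waitFor(predicate, { timeoutMs = 1000, intervalMs = 20 } = {}) {
  const start = Date.now();
  return new Promise((resolve, reject) => {
    const check = () => {
      if (predicate()) return resolve();
      if (Date.now() - start >= timeoutMs) {
        return reject(new Error(`Condition not met within ${timeoutMs}ms`));
      }
      setTimeout(check, intervalMs);
    };
    check();
  });
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Stand-in for a modal that the app shows after an async request whose
// latency varies from run to run (here, anywhere from 0 to 100ms).
function openModalAfterRandomDelay() {
  const modal = { visible: false, confirmed: false };
  setTimeout(() => {
    modal.visible = true;
  }, Math.random() * 100);
  modal.clickConfirm = () => {
    if (!modal.visible) throw new Error("Confirm button is not visible yet");
    modal.confirmed = true;
  };
  return modal;
}

// Flaky test: guesses that 90ms is always long enough, so it fails on the
// runs where the modal takes longer (roughly one run in ten).
async function naiveTest() {
  const modal = openModalAfterRandomDelay();
  await sleep(90);
  modal.clickConfirm();
  return modal.confirmed;
}

// Defensive test: waits until the modal is actually visible, then clicks.
async function defensiveTest() {
  const modal = openModalAfterRandomDelay();
  await waitFor(() => modal.visible);
  modal.clickConfirm();
  return modal.confirmed;
}
```

The naive version is exactly the kind of test that passes nine times and fails on the tenth; the defensive version trades a fixed guess for an explicit condition plus a generous timeout.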

Choose your toolset carefully

Unlike Node-based testing tools such as Mocha, Jasmine, or Karma, functional testing tool suites have a tendency to lock you in to their API or platform. This makes it very important to find a tool with a lot of momentum behind its development and maintenance. Our original functional test suite used CasperJS, a lovely little tool which wrapped around a PhantomJS headless browser and provided a testing API and various utilities. (Note that PhantomJS provides its own non-Node JavaScript runtime environment, which means you cannot use npm modules or Node utilities in your tests.)

Unfortunately, it was never a very popular tool, and over time most of its maintainers moved on to other projects. Eventually we ran into a bug where fewer than one in a thousand network requests from inside Casper/Phantom would fail, causing whichever unlucky test happened to be running to fail inexplicably. We were never able to find a fix, and the community didn’t have the resources to address the bug.

This led us to switch to Nightwatch, which runs in a Node context (yay!) and drives browsers through a Selenium server. This means you can test against real browsers, and can even watch your tests run in Chrome or Firefox! The API is very clean, and since all browser interactions are asynchronous by default, it is much easier to reason about than Casper, where some operations were synchronous and others asynchronous. So far we have not run into any random failures or other nondeterministic behavior, and have been very happy with our choice.

Unit testing was made for this

Many of these challenges are inherent to functional testing, which makes it a poor first choice for testing your browser application. By contrast, unit testing is fast, reliable, doesn’t rely on persistent state, and doesn’t tie you to a particular tool.

But beyond all of that, unit testing has one big advantage that functional testing does not. Functional testing only cares about what your code does; unit tests also care about how it does it.

Unit tests encourage you to write better code

Any developer worth her salt knows that an application can meet its functional requirements and still be an unmaintainable ball of mud. If you’re relying solely on functional tests, you don’t really get any extra incentive to write highly decoupled, DRY code. If you’re disciplined about writing unit tests, you’ll find that the better your code, the easier it is to test.

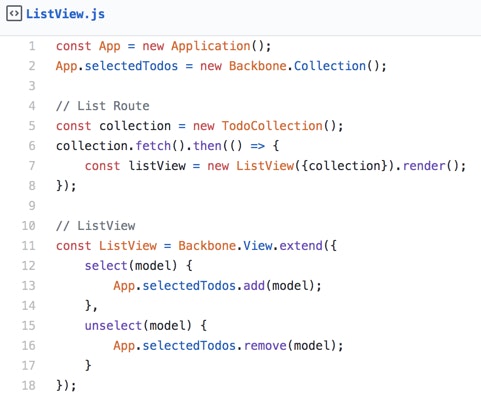

It’s very easy to write modules that depend on other pieces of code in ways that aren’t immediately obvious. Let’s look at a somewhat contrived example: a Backbone Todo application with a List View and a collection of Todo Models, any number of which can be “selected.”

ListView relies on App.selectedConvos, but you wouldn’t know this from looking at its (empty) constructor. In fact, you wouldn’t discover the dependency until you exercised select or unselect. You can write your application this way, referencing what are essentially global variables as you need them. But this causes problems: modules which do not appear to depend on each other wind up tightly coupled because of the assumptions they make about the state of the page.

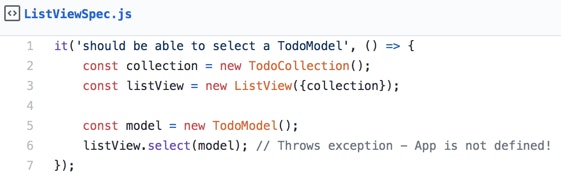

If you were functional testing this page, you would have no indication that anything smelled fishy. But if you’re writing unit tests, this implicit dependency comes to light very quickly:

At line 6, calling the select method on the listView causes an exception to be thrown, due to App and App.selectedConvos not being defined.

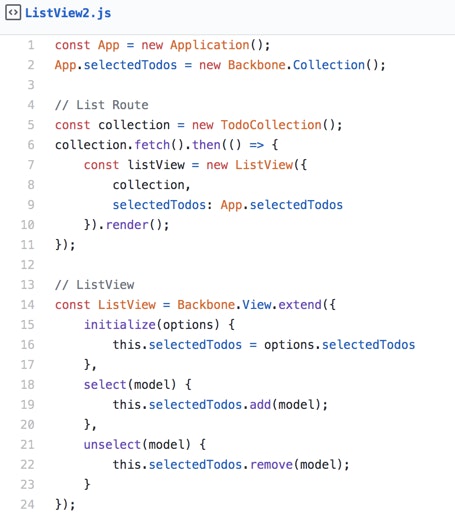

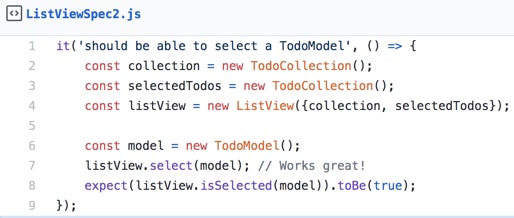
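A rough sketch of the pattern described above, written in plain JavaScript without Backbone so it runs on its own. `Selection`, this `ListView`, and the test function are hypothetical stand-ins that only mirror the shape of the example: the view reaches out to a global `App.selectedConvos` instead of receiving it.

```javascript
// Minimal stand-in for a Backbone collection of selected models.
class Selection {
  constructor() {
    this.models = [];
  }
  add(model) {
    this.models.push(model);
  }
  remove(model) {
    this.models = this.models.filter((m) => m !== model);
  }
  contains(model) {
    return this.models.includes(model);
  }
}

// The constructor takes nothing, yet select/unselect depend on a global.
class ListView {
  select(todo) {
    App.selectedConvos.add(todo); // hidden dependency on a global `App`
  }
  unselect(todo) {
    App.selectedConvos.remove(todo);
  }
}

// A unit test that builds the view in isolation, as a test file would.
function testSelectAddsTodoToSelection() {
  const listView = new ListView();
  const todo = { title: "Write tests" };
  listView.select(todo); // throws ReferenceError if `App` was never defined
  return App.selectedConvos.contains(todo);
}
```

Calling `testSelectAddsTodoToSelection()` throws a `ReferenceError` because nothing defined `App`. It only passes once the test plants a fake `App` on the global object beforehand, which is precisely the smelly setup the next paragraph warns about.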

One way to address this would be to add a setup method that creates a dummy App and selectedConvos collection, but this smells bad enough that you’ll probably find yourself wondering why you didn’t just pass selectedConvos in to ListView's constructor in the first place. A quick refactoring yields a better, more decoupled solution:
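A minimal sketch of that refactoring, again in plain JavaScript rather than Backbone, with hypothetical `Selection` and `ListView` stand-ins. In real Backbone code the collection would typically be read from the options passed to the view's `initialize` method; here a plain constructor argument plays that role.

```javascript
// Minimal stand-in for a Backbone collection of selected models.
class Selection {
  constructor() {
    this.models = [];
  }
  add(model) {
    this.models.push(model);
  }
  remove(model) {
    this.models = this.models.filter((m) => m !== model);
  }
  contains(model) {
    return this.models.includes(model);
  }
}

// The dependency is now explicit: whoever creates the view supplies it.
class ListView {
  constructor({ selectedConvos }) {
    this.selectedConvos = selectedConvos;
  }
  select(todo) {
    this.selectedConvos.add(todo);
  }
  unselect(todo) {
    this.selectedConvos.remove(todo);
  }
}

// The unit test builds everything it needs locally, with no globals.
function testSelectAndUnselect() {
  const selectedConvos = new Selection();
  const listView = new ListView({ selectedConvos });
  const todo = { title: "Write tests" };

  listView.select(todo);
  const selectedAfterSelect = selectedConvos.contains(todo);
  listView.unselect(todo);
  const selectedAfterUnselect = selectedConvos.contains(todo);

  return selectedAfterSelect && !selectedAfterUnselect;
}

console.log(testSelectAndUnselect()); // true
```

The test no longer needs to know anything about the page's global state, and the constructor signature now documents the view's real dependency.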

And therein lies the beauty of unit testing, something you could never achieve with functional testing alone. The very act of unit testing pushes you to write better code. It encourages you to write code that is stateless, functional, cohesive, and highly decoupled. Throw in the baked-in advantages of unit over functional testing, and it’s a huge win across the board.


A better place

We’ve come a long way as a team since the old days of functional-first testing. Our unit test coverage has increased tremendously, we catch regressions earlier, and worry less that changes in one place will break something in another. Compared to where we were a year ago, we’re in a really good place.

Embracing unit testing as our default for all components and positioning functional testing for post-deployment smoke testing has helped us increase our code quality and velocity, but that’s not to say it’s without its shortcomings. To find out what those are and how we are working to overcome them, you’ll have to stay tuned until next time!